The World Wide Semantic Web (WWSW) and Web Alliance for Regreening in Africa (W4RA) on Dataversity

Some days ago Jennifer Zaino from Dataversity and I (from DANS/eHG/VUA/WWSW) had a nice discussion about what the WWSW and W4RA communities are doing to improve information sharing in challenging contexts. She then published a very good summary of this discussion in a blog post entitled “Bringing the Semantic Web and Linked Data to All” 🙂

It was a pleasure to be given the opportunity to speak about our work, and I hope this article will attract and motivate more people to join our teams. The more we think about those issues and act upon them, the better and faster the outreach will be 😉

Impressions of the Data-driven Visualization Symposium

Today I attended an event entitled “Data-driven Visualization Symposium” in the beautiful Trippenhuis building of the KNAW in Amsterdam. There was a really rich schedule, with 10 speakers showcasing some of their work in the area of big data and visualisation.

Though I would have appreciated getting a bit more of the how instead of just seeing finished products, I really enjoyed the presentations. Using two very different application domains (the design of logos and the exploration of massive data, respectively), Wouter van Dijk and Edwin Valentijn showed cases where information overload can be dealt with by using clever reduction techniques. The data has to be turned into something more communicative and become part of our interaction schemes, among other social, mediated and IoT interactions. But at the end of his presentation about an atlas of Pentecostalism, Richard Vijgen also rightly reminded us to be very cautious when bringing data to an audience that has not received the critical thinking training researchers receive. Such an audience may take everything we depict at face value without questioning the graphical representation. Mirjam Leunissen followed up on this idea when showing cases of Fox News and Utrecht University producing visuals that trick the reader (e.g. 6M drawn as 2/3 the size of 7M, when it is actually 6/7). Her talk was about how visuals can be used either to convey the numbers or to convey an emotion or impression, the latter being used more by data journalists. She pointed to several great examples of impression-rich visualisations, including a view of the number of Syrian refugees, gun deaths in the US, and the depth at which the black boxes of a lost flight are expected to be found. To complete the showcasing of applications, Timo Hartmann and Anton Koning explained how visualisations can be used for collective decision making and mediated interactions in the civil engineering and medical domains.

In contrast to these portfolio presentations, Laurens van der Maaten and Elmar Eisemann gave two more technical talks. The former described the t-SNE algorithm for visual clustering, and the latter explained how common gaming techniques (frustum culling, LOD, ray tracing, …) can be combined with more advanced tricks related to the sensitivity of the eye to provide high-throughput, personalised rendering. These techniques have been applied to rendering flooding models in the project “3DI”. A remote rendering cluster is used to ensure that high-end graphics can be processed and shown on lower-grade hardware.

Last but not least, Maarten van Meersbergen and Tijs de Kler gave an overview of what the eScience center has to offer in terms of hardware and expertise, welcoming the participants to make good use of both to crash test their data as early and often as possible.

As hinted a bit earlier, what I missed the most was more explanation and guidance about how to find the best way to convey a story with visual representations, and maybe a bit more information about the tooling to use. I also missed seeing visualisations using Wii-Us but that’s a different story 😉 Right now, I do not have a much clearer idea of how we should visualise our census data from CEDAR than I had before attending the symposium… let’s see whether something pops up later when thinking more about everything that was shown today 🙂

Dataviz with a Wii-U (continued)

Following the discussion I had after my previous posts, here is a bit more structured explanation of the ideas:

Please feel free to ping me and/or comment on this post if you too think it’s a good idea 🙂

Why don’t we use gaming consoles for data visualisation?

Yesterday I was sitting in a very interesting meeting with some experts in data visualisation. A lot of impressive things were presented, and the Wii remote and the Kinect were mentioned a couple of times. As far as I have observed, these devices are used as a cheap way to get sensors. And they certainly deliver: in the field of user interfaces as well as in robotics, achievements have been made thanks to these peripherals. But why does nobody seem to be using the complete gaming devices? Even the research field of serious gaming shows little overlap with the console gaming industry.

I’m a fan of Nintendo so my argumentation will be a bit Nintendo-centric, but the same point could easily be made about the devices from Nintendo’s competitors. Developing data visualisations on a Wii-U, or a handheld device like the 3DS, has the potential to save time and reach a greater audience. The development kits sold to the gaming industry are reasonably priced and give access to a consumer-product grade gaming toolkit that is ready to use. As far as the cheap hardware argument goes, the Wii-U is rather interesting: it’s a strong GPU with HDMI output paired with a tablet that has all the sensors one may expect. There are also pointing capabilities inherited from the Wii and a dedicated social network for applications running on the Wii-U. Outside of gaming, this social network is already being used on the Wii-U for social TV and will certainly be used for new incarnations of services that used to be on the Wii (Weather, Polls, …). All of this works out of the box, with no need to hack new things together before getting on with making great interactive visualisations or serious games.

Then comes the argument of coding for a dedicated platform. It is true that the Wii-U runs a dedicated operating system which can be expected to be deployed on all of Nintendo’s devices but not outside of Nintendo’s realm (pretty much like Apple’s iOS!). So far, Nintendo has applied a “generation minus one” compatibility policy to its devices, meaning that things developed for one generation of console will work on the next one. The Wii-U runs Wii-U and Wii software. The Wii runs Wii and GameCube software, etc. Previous iterations of this backward compatibility required dedicated additional hardware, but Nintendo seems to have stopped doing that now. Thus, with a new generation of gaming consoles every 6 or 7 years, this gives 12 to 14 years of stability for anything developed on one platform. Another goody is that, as a developer, you will not need to update your visualisation to deal with the console updates that will happen over this period. Actually, things are always developed for a dedicated platform. As far as picking one such platform goes, I would bet on the Web platform rather than on Java, Android, iOS or Flash. It is the only one focusing on open standards that everyone can implement. Applications developed with modern Web technologies can run everywhere these technologies are supported (including the Wii-U, thanks to the popular WebKit!). The Google Street View application for the Wii-U has been coded in HTML5, using no native code.

In terms of outreach, developing our research prototypes for hardware from the gaming industry would bring our products to the living room. That is closer to a wide, diverse share of the people whose money is actually used to fund (public) research. If the output of a research project can make it to the marketplace of a console device, everybody will be able to just download it and use it from the couch, possibly involving other family members and, now, remotely connected friends via the integrated social networking features.

Nintendo and its competitors are working hard at bringing new entertaining and social experiences. This goes well beyond the mere gaming they focused on only a couple of years ago. Entertainment giants expect us to throw out our DVD players, media players, smart TVs and music players to just use their console and a dumb (big) screen. I think it would be a waste not to consider their hardware when we plan our research activities. Let me know if you think otherwise 😉

Data export VS Faceted expressivity

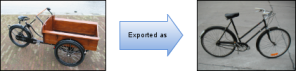

Anyone visiting the Netherlands will inevitably stumble upon some “bakfiets” in the streets. This Dutch speciality, which looks like the result of cross-breeding a pick-up with a bike, can be used for many things, from getting the kids around to moving a fridge.

Now, let’s consider a Dutch bike shop that sells some bakfiets among other things. In its information system these items will surely be labelled as “bakfiets” because this is just what they are. This information system can also be expected to be filled throughout with inputs and semantics (table names, field names, …) in Dutch. If that bike shop wants to start selling its items outside of the Netherlands, the data will need to be exported into some international standard so that other sellers can re-import it into their own information systems. This is where things get problematic…

What will happen to the “bakfiets” during the export? As it does not make sense to define an international-level class “bakfiets” (which can be translated as “freight bicycle”), every shop item of type “bakfiets” will most certainly be exported as an item of type “bike”. If the Dutch shop owner is lucky enough, the standard may let him indicate through a comment property that, no, this is not really just a standard two-wheeled bike. But even if the importer is able to use that comment (which is not guaranteed), the information is lost: when going international, every “bakfiets” will become a regular bike. Even more worrying is the fact that, besides the information loss itself, there is no indication of how much information is gone.

Semantic Web technologies can be of help here by enabling the qualification of shop items with facets rather than strict types. That is, assigning labels or tags to things instead of putting items into boxes. The Dutch shop will be able to express in its knowledge system that its bikes with a box are both of the specific type “bakfiets”, which makes sense only in the Netherlands, and instances of the international type “bike”. An additional piece of information present in the knowledge base will connect the two types, saying that the former is a specialisation of the latter. The resulting information export flow is as follows:

- The Dutch shop assigns all the box-bikes to the class “bakfiets” and the regular bikes to the class “bike”.

- A “reasoner” infers that, because “bakfiets” is a specific type of “bike”, all the instances of “bakfiets” are also of type “bike”.

- Another, non-Dutch shop asking the Dutch shop for instances of “bike” will get a complete list of all the bikes and see that some of them are actually of type “bakfiets”.

- If his own knowledge system does not let him store facets, the importer will have to flatten the data to one class, but he will have received the complete information and will know how much of it is lost by removing facets.
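
The four steps above can be sketched in a few lines of plain Python. This is only a toy illustration, not how an actual Semantic Web reasoner is implemented: the item identifiers, the `SUBCLASS_OF` table and the function names are all made up for the example, and a real system would rather rely on RDF data and an off-the-shelf reasoner.

```python
# Toy illustration of the export flow; every name here is hypothetical.

# Knowledge connecting the two types: "bakfiets" is a specialisation of "bike".
SUBCLASS_OF = {"bakfiets": "bike"}

# Step 1: the Dutch shop assigns each item to a class.
ASSERTED = {
    "item-1": {"bakfiets"},
    "item-2": {"bike"},
    "item-3": {"bakfiets"},
}


def infer_types(asserted, subclass_of):
    """Step 2: a very naive 'reasoner' adding every ancestor class as a facet."""
    inferred = {}
    for item, types in asserted.items():
        closure = set(types)
        frontier = list(types)
        while frontier:
            parent = subclass_of.get(frontier.pop())
            if parent is not None and parent not in closure:
                closure.add(parent)
                frontier.append(parent)
        inferred[item] = closure
    return inferred


def instances_of(inferred, wanted):
    """Step 3: another shop asks for all items carrying the facet `wanted`."""
    return {item: types for item, types in inferred.items() if wanted in types}


def flatten(exported, kept):
    """Step 4: an importer without facets keeps one class and records the loss."""
    flat = {item: kept for item in exported}
    lost = {
        item: types - {kept}
        for item, types in exported.items()
        if types - {kept}
    }
    return flat, lost


inferred = infer_types(ASSERTED, SUBCLASS_OF)
bikes = instances_of(inferred, "bike")
flat, lost = flatten(bikes, "bike")

print(sorted(bikes))  # all three items are returned as bikes
print(lost)           # the facets dropped per item when flattening
```

The point of the last step is that the importer still ends up with plain bikes, but `lost` tells him exactly which items were more than that.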

Beyond this illustrative example, data export presents real issues in many cases. Everyone usually wants to express their data using the semantics that apply to them, and has to force information into some other conceptualisation framework when this data is shared. A more detailed case for research data can be found in the following preprint article:

- Christophe Guéret, Tamy Chambers, Linda Reijnhoudt, Frank van der Most, Andrea Scharnhorst, “Genericity versus expressivity – an exercise in semantic interoperable research information systems for Web Science”, arXiv preprint http://arxiv.org/abs/1304.5743, 2013